What Is Extract Transform Load?

- LINCS Project

- October 27, 2021

— Justin Francis, LINCS Junior Programmer —

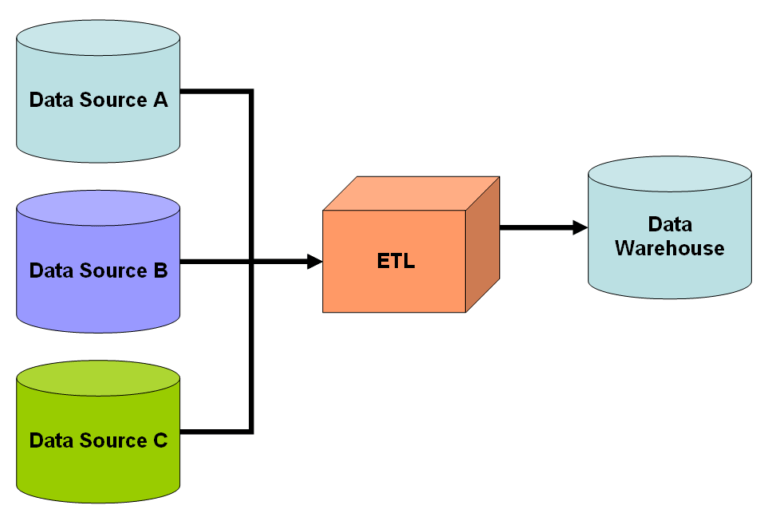

In data science there is a commonly used process called extract-transform-load (ETL). ETL involves three main steps:

- 1. Extract data from a source,

- 2. Transform the data via data cleansing and data manipulation, and

- 3. Load the transformed data to a data warehouse (the final collection of data). (Sethi 2018)

Before having much experience in data processing, my colleague Devon and I were not sure what ETL was. Now, after jointly transforming an entire dataset from XML to a whole new structure utilizing RDF triples using the CIDOC CRM ontology we’re beginning to understand the process. For the bulk of the transform process we used the tool Memory Mapping Manager (3M) as it performed most of the transformations we needed out of the box.

Why use an ETL Pipeline?

The first question is why did we need to use an ETL process on our data? In our case, LINCS is working with many datasets in many different formats, including relational data, TSVs, XML, and others, each structured according to the conventions of the researchers providing the data. In particular, for our task we needed to convert XML data, formatted according to the Yellow Nineties specification, to CIDOC CRM, so that we would ultimately have a common data model with shared vocabularies that are interlinked. With this done, researchers will be able to learn new things from the connected datasets. In the blog post “Why LINCS?” Susan Brown explains the benefits of connected datasets: they join together “related stuff from different websites so that humans and machines can talk about the same things in the same terms, and can advance knowledge and debate about those things collaboratively.”

How do you start an ETL pipeline?

One could write an entire software stack to do the transform job, but more often (especially on open-source projects), the first step is to look for an existing tool. This requires a lot more research than one may initially anticipate. A couple key questions to ask when researching tools are:

- Will the tool do exactly what you need it to do? If not, can you modify it to do this?

- What language is the tool written in? Does it have a simple front end user interface?

- Is there robust documentation on how to use the tool?

- Does its license suit your needs? Is it actively maintained and/or monitored?

For the most part, 3M answered yes to all these questions for LINCS. It was able to transform a complete dataset from XML to CIDOC CRM, almost right out of the box.

What is an ETL pipeline capable of?

The advantages of developing an ETL pipeline are similar to the advantages of Object Oriented Programming (OOP). With 3M, as soon as we changed the input data, the output automatically updated. This meant that we could easily configure many aspects of the transform process. For instance, one modification can change the template for auto-generated labels, which means we can easily transform the entire dataset with new labels. 3M’s front end has a layer of abstraction where the inner workings are hidden, which makes it an easy tool for us to understand.

Final Considerations

3M was not able to do everything we needed, so in some cases we wrote our own code to transform our data before loading into 3M. In particular, 3M could only extract information from one entity’s set of triples at a time, so we needed to use XPATH to add information to one entity using information from another. When writing this code we had to keep in mind another core concept of an ETL pipeline: scalability. The dataset we converted is not the only XML dataset that will be converted, which means that the entire process has to be able to transform new data without making any serious changes.

Following some of the core concepts of an ETL pipeline, we have hopefully made preparing the data for that final load as automated as possible and therefore easier for future researchers.

In another blog post Devon discusses how 3M addresses the challenges surrounding the labour involved in the data conversion process.

Works Cited

Diljeet Singh Sethi, “Understanding Extract, Transform and Load (ETL) in Data Analytics world with an example in Python Code” DIY for Teens, July 8, 2018. https://medium.datadriveninvestor.com/understanding-extract-transform-and-load-etl-and-its-necessity-in-data-analytics-world-with-an-64346016153d.