Ice cream, Binaries, and Maybes

- LINCS Project

- April 25, 2023

— Jingyi Long, LINCS undergraduate research assistant —

In my first meeting this summer as a data science research assistant, we each followed our personal introductions with declarations of our favourite ice cream flavours. Mine was and continues to be Häagen-Dazs’ Strawberry Cheesecake ice cream, and I was pleasantly surprised to learn someone else on the team felt the same. However, the biggest surprise was learning that someone enjoyed microwaving their ice cream to change its texture. At that point, is it still ice cream? Is it soup? Or a milkshake? What even counts as ice cream?

Shelving those questions, I dove headfirst into a different, non-dairy world: the world of knowledge organization systems. I was swimming in a sea of acronyms (RDF, SKOS, OWL, CWRC, and TTL, to name a few) and organizational structures. I was busy understanding how humans could use organization systems and languages developed for the sole purpose of knowledge representation to wrangle computers into viewing the world in human-made categories.

Spoiler alert—it’s messy. But first, a mini etymology lesson.

Ontology comes from the Greek words onto (being) and logia (study), effectively meaning the study of being. Ontologists question how things are grouped into categories, which categories are at the topmost level, and how these categories inform the classification of everything. In information science and digital humanities, an ontology refers to a model of the broadest categories in a specific knowledge set. While ontologies feature generic concepts, they do not include specifics—those are left to vocabularies. Vocabularies expand on the generic concepts in ontologies by giving them contexts and meanings.

For instance, an ontology of desserts may include a class of dairy desserts with a subclass for ice cream. However, ice cream flavours may be omitted from the ontology due to their specificity; instead, they may appear in a vocabulary about desserts. May is the key word in this example. The creator of the ontology may decide strawberry cheesecake ice cream is important enough to add to the ontology and so other flavours should be there too.

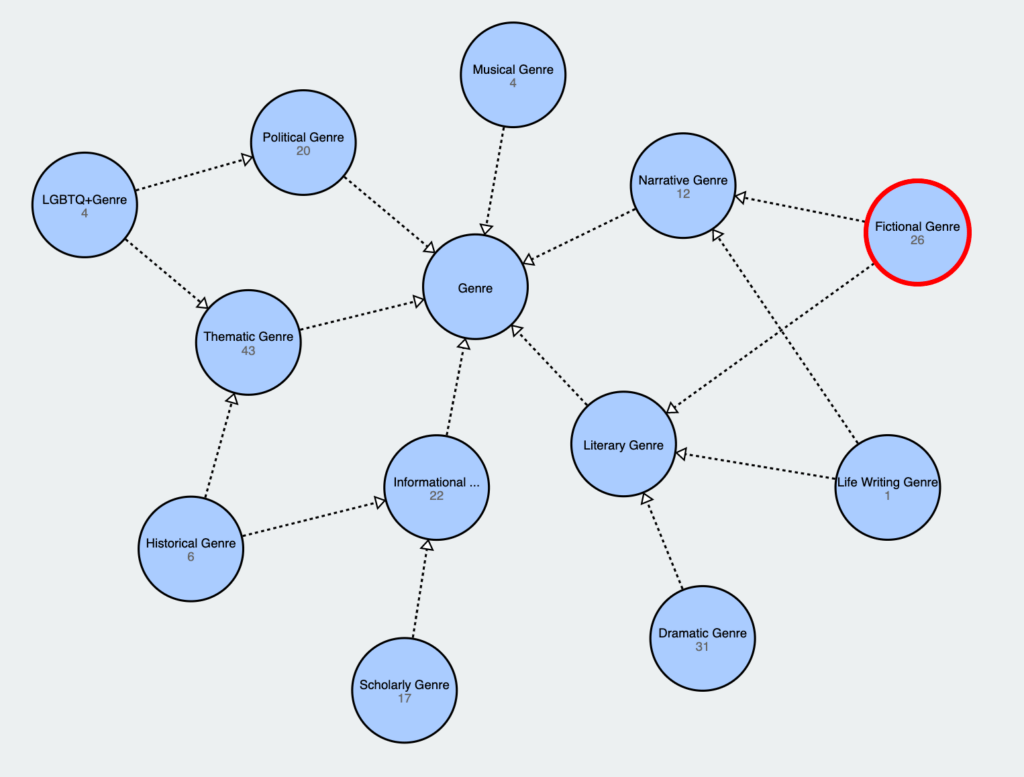
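To make the split concrete, here is a minimal Python sketch of the dessert example. It is only an illustration: the class names, the flavour list, and the `classes_for` helper are all made up for this post and are not taken from any LINCS ontology or vocabulary.

```python
# Hypothetical dessert ontology: each class points to its parent class.
SUBCLASS_OF = {
    "DairyDessert": "Dessert",
    "IceCream": "DairyDessert",
}

# Hypothetical dessert vocabulary: specific terms, each filed under one ontology class.
FLAVOUR_VOCABULARY = {
    "Strawberry Cheesecake": "IceCream",
    "Vanilla": "IceCream",
}


def ancestors(cls):
    """Return every class above `cls` in the ontology, nearest first."""
    chain = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        chain.append(cls)
    return chain


def classes_for(term):
    """Return the ontology classes a vocabulary term falls under, nearest first."""
    direct = FLAVOUR_VOCABULARY[term]
    return [direct] + ancestors(direct)


print(classes_for("Strawberry Cheesecake"))
```

If the creator decided flavours belonged in the ontology after all, "Strawberry Cheesecake" would simply move from the second dictionary into the first as a subclass of "IceCream".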

May kept popping up, not only while I thought about ice cream but also as I navigated LINCS’s ontologies and vocabularies. One of my main roles this summer was to carry changes made in the ontologies over to the vocabularies. This included fixing redundant relationships in the Genre vocabulary, such as feminist theory being declared part of both the philosophical and scholarly genres when the philosophical genre was in fact a subset of the scholarly genre. As a result, I became well versed in the ins and outs of the CWRC, Genre, and Illness & Injuries vocabularies.
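The kind of redundancy described above can be spotted mechanically. The sketch below is one possible way to do it in plain Python, not the tooling I actually used: the `BROADER` dictionary is a hypothetical stand-in for the Genre vocabulary, and both function names are invented for illustration. A direct link from a concept to a broader genre counts as redundant when that genre is already reachable through one of the concept's other broader genres.

```python
# Hypothetical slice of a genre vocabulary: concept -> directly declared broader genres.
BROADER = {
    "feminist theory": {"philosophical", "scholarly"},
    "philosophical": {"scholarly"},
    "scholarly": set(),
}


def all_broader(concept, broader):
    """Collect every genre reachable by following broader links upward."""
    seen = set()
    stack = list(broader.get(concept, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(broader.get(current, ()))
    return seen


def redundant_links(broader):
    """Find direct links that are already implied by another direct link."""
    redundant = set()
    for concept, parents in broader.items():
        for parent in parents:
            others = parents - {parent}
            if any(parent in all_broader(other, broader) for other in others):
                redundant.add((concept, parent))
    return redundant


print(redundant_links(BROADER))
```

In this toy data, the direct link from feminist theory to the scholarly genre is flagged, because the philosophical genre already leads there.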

A quick aside before I explain the much-dreaded may. The ontologies and vocabularies I worked with were not drawn on a whiteboard where I could erase concepts and add connections between classes as I pleased. Instead, they lived on the web, where they were encoded using semantic languages and structures such as OWL, RDFS, and SKOS. Computers thrive on binaries where a decision flips a switch on or off; ambiguity stalls the system, as machines cannot infer implied meaning the way a human brain can. Semantic models such as ontologies and vocabularies therefore try to replicate for computers the methods of typing and categorizing that seem like second nature to us.

So to recap: computers operate in binaries but we do not. The entirety of the web’s data is stored in ones and zeroes, but our brains see the world in much more complex, nuanced ways.

While ontologies and vocabularies may seem similar (they both help categorize things), ontologies are strict in how concepts can be grouped since they use formal logic. A vocabulary, in comparison, doesn’t claim that ice cream is a dessert; it only helps describe concepts, such as the many decadent ice cream flavours. Writing it like this makes it seem intuitive that formal logic doesn’t apply to vocabularies, or, as the W3C writes:

> [Some Knowledge Organization Systems] are, by design, not intended to represent a logical view of their domain. Converting such KOS to a formal logic-based representation may, in practice, involve changes which result in a representation that no longer meets the originally intended purpose.

It’s true that hindsight is 20/20 because while it seems obvious now, I spent countless hours agonizing over conceptual hierarchies in the vocabularies that just did not make logical sense. The fictional genre may fall under the literary genre, but it also may fall under the narrative genre. If I were asked this question out of nowhere, I would ask why not both, but I was so keyed into the binary thinking of computers that I felt blindsided when I saw the fictional genre as an offshoot of both the literary and narrative genres.

It was easy to fall into the pattern of thinking where a concept was either A or B, especially when the categories were already in front of me. It was harder to recognize that things in real life are much more complicated and nuanced than a coded system could neatly categorize.

I spent so much of the summer trying to understand how the world could fit into neat little boxes that I forgot about the human aspect of categorization: the messiness and maybes that often come with making decisions. While the polished world of ontologies and vocabularies may seem far removed from everyday life, it took me the better part of the summer to remember that they’re based on human-made categories, whose boundaries can shift over time and vary by user.

That’s all to say that by the end of the summer I had an answer to my burning questions from the start of May: it’s all subjective! This may be a non-answer in the binary yes-or-no sense, but as long as there is some sort of consensus (whether between two people or millions), the fate of melted ice cream, like vocabularies, can exist in the gap between absolutes.